kubectl apply -f whatever-the-fuck-it-is.yaml

kubectl apply -f: the story of our lives. Most of us use it without knowing what it does or how it does it. At first glance it looks straightforward: take the input file and upsert those changes to the API server. But how are those changes tracked, what the heck is --server-side, and what is this all about? Let's find out!

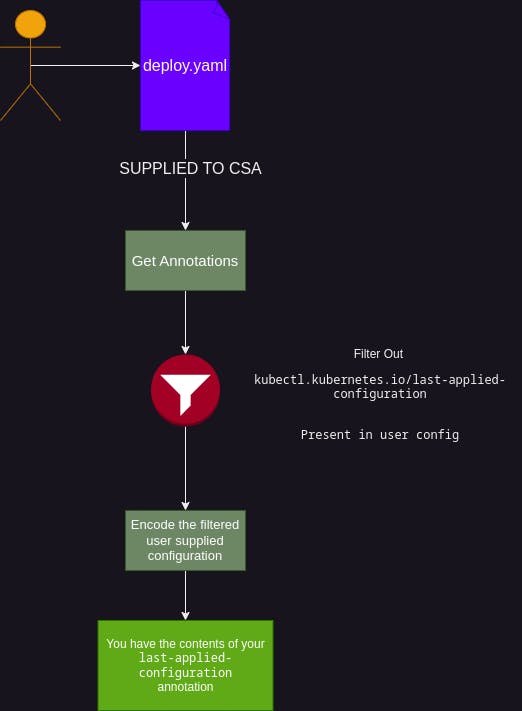

Client-Side apply (CSA)

CSA tracks user changes with the kubectl.kubernetes.io/last-applied-configuration annotation. As the name suggests, this annotation only records the configuration you last applied. Anything that you didn't apply won't be reflected in it. An example is worth a thousand words, so see for yourself.

cat <<EOF | kubectl apply -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx
  name: nginx
spec:
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - image: nginx
        name: nginx
EOF

# kubectl apply view-last-applied deploy nginx

kubectl get deploy nginx -o jsonpath="{.metadata.annotations.kubectl\.kubernetes\.io/last-applied-configuration}" | jq

Output:

{
  "apiVersion": "apps/v1",
  "kind": "Deployment",
  "metadata": {
    "annotations": {},
    "labels": {
      "app": "nginx"
    },
    "name": "nginx",
    "namespace": "default"
  },
  "spec": {
    "selector": {
      "matchLabels": {
        "app": "nginx"
      }
    },
    "template": {
      "metadata": {
        "labels": {
          "app": "nginx"
        }
      },
      "spec": {
        "containers": [
          {
            "image": "nginx",
            "name": "nginx"
          }
        ]
      }
    }
  }
}

Now a few things to notice in the contents of the last-applied-configuration annotation.

It only contains data about the configuration you applied (duh), it does not contain any other data like the count of replicas, etc.

An empty annotations map, why though? I do not see any other empty fields 🤔 This is because annotations are specially managed by apply when using CSA. This makes sense too, annotations need to be specially handled because kubectl.kubernetes.io/last-applied-configuration is also an annotation. Here is the basic flow:

You cannot set the last-applied-configuration annotation manually, it is dropped by the logic of CSA.

Now yeah-yeah, if it is empty, it could be silently dropped so that no empty map gets encoded, but this is the way the Kubernetes authors have done it. Sort of makes sense too, as a reminder that annotations in user-config are handled specially.

Now let's try to modify the deployment without using apply.

kubectl scale deploy nginx --replicas=2

# kubectl apply view-last-applied deploy nginx

kubectl get deploy nginx -o jsonpath="{.metadata.annotations.kubectl\.kubernetes\.io/last-applied-configuration}" | jq

Output:

{
  "apiVersion": "apps/v1",
  "kind": "Deployment",
  "metadata": {
    "annotations": {},
    "labels": {
      "app": "nginx"
    },
    "name": "nginx",
    "namespace": "default"
  },
  "spec": {
    "selector": {
      "matchLabels": {
        "app": "nginx"
      }
    },
    "template": {
      "metadata": {
        "labels": {
          "app": "nginx"
        }
      },
      "spec": {
        "containers": [
          {
            "image": "nginx",
            "name": "nginx"
          }
        ]
      }
    }
  }
}

⬆️ No mention of replicas

This is the same output we encountered earlier, nothing has changed in the last-applied-configuration annotation because as the name suggests, only last-applied changes are tracked.

There are 3 things required whenever deciding what changes have to be made to the object.

User Supplied Configuration

last-applied-configuration annotation

Live Object Configuration (Stored in etcd)

For Deleting fields:

(last-applied-configuration annotation) - (User Supplied Configuration) i.e. delete fields that are present in last-applied-configuration annotation but not present in the user-supplied manifest.

For Adding/Updating Fields:

|(User Supplied Configuration) - (Live Object Configuration (Stored in etcd))| i.e. The fields present in the user-supplied configuration file whose values don't match the live configuration.

You get the basic idea, now how this merge patch is created for different types of fields is documented awesomely at https://kubernetes.io/docs/tasks/manage-kubernetes-objects/declarative-config/#how-different-types-of-fields-are-merged.
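To make the two rules above concrete, here is a minimal Python sketch of the bookkeeping. It is my own toy model, not kubectl's actual implementation: objects are flattened to dotted paths, lists are not merged at all, and the object shape (including the spec.paused field in the sample data) is deliberately simplified and is not a complete Deployment.

```python
def flatten(obj, prefix=""):
    """Flatten nested dicts into {"a.b.c": value}; other values are kept as-is."""
    flat = {}
    for key, value in obj.items():
        path = f"{prefix}.{key}" if prefix else key
        if isinstance(value, dict) and value:
            flat.update(flatten(value, path))
        else:
            flat[path] = value
    return flat


def csa_patch(last_applied, user_config, live):
    """Return (fields_to_delete, fields_to_set) following the two rules above."""
    last, user, current = flatten(last_applied), flatten(user_config), flatten(live)
    # Rule 1: present in last-applied but gone from the user's manifest -> delete.
    to_delete = sorted(set(last) - set(user))
    # Rule 2: present in the user's manifest with a value that differs from live -> set.
    to_set = {path: value for path, value in user.items() if current.get(path) != value}
    return to_delete, to_set


# Simplified, hypothetical object shapes (not a valid Deployment).
last_applied = {"spec": {"paused": True, "template": {"image": "nginx"}}}
user_config = {"spec": {"template": {"image": "nginx:alpine"}}}
live = {"spec": {"paused": True, "replicas": 2, "template": {"image": "nginx"}}}

to_delete, to_set = csa_patch(last_applied, user_config, live)
print(to_delete)  # ['spec.paused']
print(to_set)     # {'spec.template.image': 'nginx:alpine'}
```

Notice that spec.replicas shows up in neither result: it was never applied, so CSA leaves the value set by kubectl scale alone.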

Yeahhhh, that was CSA, but there is a small little problem.

Dev A: Applies a manifest (creates an object).

Dev B: Sees a problem with that manifest, creates a local copy, updates it, and applies the manifest.

Dev A: Notices some other problem, updates their own local copy, and applies the manifest again. Accidentally reverts the changes made by Dev B.

Yikes! 😬
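If you want to see the revert happen without a cluster, here is a small Python simulation. It is a toy model under loud assumptions: objects are flat dicts, client_side_apply is a hypothetical helper that follows the delete and add/update rules described earlier, and the nginx:1.25 tag is just a made-up fix by Dev B.

```python
def client_side_apply(live, last_applied, manifest):
    """Toy CSA on flat dicts: returns the new live object and the new last-applied."""
    new_live = dict(live)
    # Delete fields that were applied before but are gone from the manifest.
    for field in set(last_applied) - set(manifest):
        new_live.pop(field, None)
    # Set every field whose manifest value differs from the live value.
    for field, value in manifest.items():
        if new_live.get(field) != value:
            new_live[field] = value
    return new_live, dict(manifest)


live, last_applied = {}, {}

# Dev A creates the object.
dev_a = {"image": "nginx", "replicas": 1}
live, last_applied = client_side_apply(live, last_applied, dev_a)

# Dev B copies the manifest, fixes the image tag, and applies.
dev_b = {**dev_a, "image": "nginx:1.25"}
live, last_applied = client_side_apply(live, last_applied, dev_b)
after_dev_b = dict(live)

# Dev A bumps replicas in a stale local copy and applies again.
dev_a = {**dev_a, "replicas": 3}
live, last_applied = client_side_apply(live, last_applied, dev_a)

print(after_dev_b)  # {'image': 'nginx:1.25', 'replicas': 1}
print(live)         # {'image': 'nginx', 'replicas': 3}
```

Dev A's stale copy still says image: nginx, that differs from the live value, so it gets "updated" right back. Nothing warns anyone.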

Server-Side apply (SSA)

Yikes! 😬

That scream is still echoing in my ears, we need to do something. How about a centralized system that has information about each field and only lets a select few (managers) make changes to that particular field? Wellll, I am sort of a genius, 😎 🤏 no need to praise me.

Looks like there are more geniuses out there and folks have had this idea before. They implemented it and even named it: server-side apply.

Server-side apply works by associating managers with fields. Whenever a user tries changing/setting the value of a field with POST, PUT, or non-apply PATCH, that user directly becomes the manager of the fields the user specified. Let's see what these managers look like.

cat <<EOF | kubectl apply --server-side -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx
  name: nginx
spec:
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - image: nginx
        name: nginx
EOF

kubectl get deploy nginx -o yaml --show-managed-fields=true

Output:

apiVersion: apps/v1
kind: Deployment
metadata:
  annotations:
    deployment.kubernetes.io/revision: "1"
  creationTimestamp: "2023-06-22T08:16:52Z"
  generation: 1
  labels:
    app: nginx
  managedFields:
  - apiVersion: apps/v1
    fieldsType: FieldsV1
    fieldsV1:
      f:metadata:
        f:labels:
          f:app: {}
      f:spec:
        f:selector: {}
        f:template:
          f:metadata:
            f:labels:
              f:app: {}
          f:spec:
            f:containers:
              k:{"name":"nginx"}:
                .: {}
                f:image: {}
                f:name: {}
    manager: kubectl
    operation: Apply
    time: "2023-06-22T08:21:12Z"
  - apiVersion: apps/v1
    fieldsType: FieldsV1
    fieldsV1:
      f:metadata:
        f:annotations:
          .: {}
          f:deployment.kubernetes.io/revision: {}
      f:status:
        f:availableReplicas: {}
        f:conditions:
          .: {}
          k:{"type":"Available"}:
            .: {}
            f:lastTransitionTime: {}
            f:lastUpdateTime: {}
            f:message: {}
            f:reason: {}
            f:status: {}
            f:type: {}
          k:{"type":"Progressing"}:
            .: {}
            f:lastTransitionTime: {}
            f:lastUpdateTime: {}
            f:message: {}
            f:reason: {}
            f:status: {}
            f:type: {}
        f:observedGeneration: {}
        f:readyReplicas: {}
        f:replicas: {}
        f:updatedReplicas: {}
    manager: kube-controller-manager
    operation: Update
    subresource: status
    time: "2023-06-22T08:16:56Z"
  name: nginx
  namespace: default
  resourceVersion: "38318"
  uid: 6a93bb30-f068-45d8-b12c-547b2171a114
spec:
  ...

In the output above, we can see there are two field managers i.e. kubectl and kube-controller-manager. Those weird looking f: and k: things are field and key:

From https://kubernetes.io/docs/reference/generated/kubernetes-api/v1.27/#fieldsv1-v1-meta:

FieldsV1 stores a set of fields in a data structure like a Trie, in JSON format. Each key is either a '.' representing the field itself, and will always map to an empty set, or a string representing a sub-field or item. The string will follow one of these four formats:

'f:<name>', where <name> is the name of a field in a struct, or key in a map

'v:<value>', where <value> is the exact json formatted value of a list item

'i:<index>', where <index> is position of a item in a list

'k:<keys>', where <keys> is a map of a list item's key fields to their unique values

If a key maps to an empty Fields value, the field that key represents is part of the set. The exact format is defined in sigs.k8s.io/structured-merge-diff
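If the trie is hard to read, a few lines of Python can turn it into plain field paths. This is only a reading aid I sketched from the format description above (owned_paths is a hypothetical helper, and the dotted/bracketed path notation is my own choice), fed with the kubectl entry from the output earlier.

```python
import json


def owned_paths(fields, prefix=""):
    """Yield a readable path for every field recorded in a FieldsV1 trie."""
    for key, children in fields.items():
        if key == ".":
            # "." marks the enclosing field itself as part of the set.
            yield prefix
            continue
        kind, _, name = key.partition(":")
        if kind == "f":
            segment = f".{name}"
        elif kind == "k":
            # The key is a JSON map of a list item's key fields, e.g. {"name":"nginx"}.
            pairs = json.loads(name).items()
            segment = "[" + ",".join(f"{k}={v}" for k, v in pairs) + "]"
        else:
            # "v:<value>" or "i:<index>"
            segment = f"[{name}]"
        path = prefix + segment
        if children:
            yield from owned_paths(children, path)
        else:
            # An empty value means this field is part of the set.
            yield path


# fieldsV1 of the "kubectl" manager, copied from the output above.
kubectl_fields = {
    "f:metadata": {"f:labels": {"f:app": {}}},
    "f:spec": {
        "f:selector": {},
        "f:template": {
            "f:metadata": {"f:labels": {"f:app": {}}},
            "f:spec": {
                "f:containers": {
                    'k:{"name":"nginx"}': {".": {}, "f:image": {}, "f:name": {}}
                }
            },
        },
    },
}

paths = list(owned_paths(kubectl_fields))
for path in paths:
    print(path)
```

For the kubectl entry this prints six paths, from .metadata.labels.app down to .spec.template.spec.containers[name=nginx].name.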

Now only the managers can change the value of those fields directly with Apply (PATCH with content type application/apply-patch+yaml). If any other actor tries to apply to these fields, it will result in a conflict.

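This solves our above-mentioned Yikes problem. When Dev B tries to apply changes to fields that Dev A manages, the apply results in a conflict. Dev B can then either take over ownership of those fields (--force-conflicts=true) or sync up with Dev A. Either way, a dev won't accidentally override someone else's changes. One caveat: this only works if the two devs apply under different manager names (for example via --field-manager), because by default every kubectl apply --server-side uses the manager name kubectl.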

There are a few gotchas or things you should know about SSA.

Not all fields in the live configuration are managed by some manager. For example, see above: there is no manager for spec.template.spec.dnsPolicy, it gets defaulted to ClusterFirst.

❗❗Only Apply (PATCH with content type application/apply-patch+yaml) results in a conflict, other updates will directly take over the ownership of the field. The role of managers is to prevent users from accidentally applying older local versions. Other types of updates are usually done by controllers.

❗❗If a field was maintained by some manager, and that manager later stops managing it and no other manager is managing it, it will get reset to the default value. Go read more about it at https://kubernetes.io/docs/reference/using-api/server-side-apply/#transferring-ownership, this is important GO-READ.

Merge strategy for different types of fields.

Now let's try to cause some conflicts:

Let's change our deployment's (nginx in default namespace) local copy to manage replicas too:

cat <<EOF | kubectl apply --server-side -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx
  name: nginx
spec:
  replicas: 1 # We are now managing replicas too!
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - image: nginx
        name: nginx
EOF

kubectl scale deploy nginx --replicas=5

kubectl get deploy nginx -o yaml --show-managed-fields=true

Output:

apiVersion: apps/v1
kind: Deployment
metadata:
  annotations:
    deployment.kubernetes.io/revision: "1"
  creationTimestamp: "2023-06-22T09:03:41Z"
  generation: 3
  labels:
    app: nginx
  managedFields:
  - apiVersion: apps/v1
    fieldsType: FieldsV1
    fieldsV1:
      f:metadata:
        f:labels:
          f:app: {}
      f:spec:
        f:selector: {}
        f:template:
          f:metadata:
            f:labels:
              f:app: {}
          f:spec:
            f:containers:
              k:{"name":"nginx"}:
                .: {}
                f:image: {}
                f:name: {}
    manager: kubectl
    operation: Apply
    time: "2023-06-22T09:03:41Z"
  - apiVersion: apps/v1
    fieldsType: FieldsV1
    fieldsV1:
      f:spec:
        f:replicas: {}
    manager: kubectl
    operation: Update
    subresource: scale
    ...

As you can see, the ownership of f:replicas has been transferred from:

manager: kubectl

operation: Apply

⬇️

manager: kubectl

operation: Update

subresource: scale

Now try applying the old apply config again:

cat <<EOF | kubectl apply --server-side -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx
  name: nginx
spec:
  replicas: 1 # We are now managing replicas too!
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - image: nginx
        name: nginx
EOF

Output:

error: Apply failed with 1 conflict: conflict with "kubectl" with subresource "scale" using apps/v1: .spec.replicas
Please review the fields above--they currently have other managers. Here
are the ways you can resolve this warning:
* If you intend to manage all of these fields, please re-run the apply
  command with the `--force-conflicts` flag.
* If you do not intend to manage all of the fields, please edit your
  manifest to remove references to the fields that should keep their
  current managers.
* You may co-own fields by updating your manifest to match the existing
  value; in this case, you'll become the manager if the other manager(s)
  stop managing the field (remove it from their configuration).
See https://kubernetes.io/docs/reference/using-api/server-side-apply/#conflicts

The operation failed with a conflict because it was an apply (PATCH with content type application/apply-patch+yaml), whereas kubectl scale was able to take over ownership directly because it sent a PATCH with content type application/merge-patch+json.
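The whole apply, scale, apply-again dance fits in a small Python toy model. Big assumptions here: ToyObject and its methods are made up for illustration and are not the API server's logic, the manager labels are hypothetical strings (real managedFields entries are distinguished by manager name, operation, and subresource), and the model ignores what happens when a manager drops a field from its manifest.

```python
class Conflict(Exception):
    """Raised when an apply touches a field owned by someone else."""


class ToyObject:
    """Toy model: one value and a set of owning managers per field."""

    def __init__(self):
        self.values = {}
        self.owners = {}  # field -> set of manager labels

    def apply(self, manager, config, force=False):
        """Model of an Apply request: differing values on foreign fields conflict."""
        conflicts = [
            field
            for field, value in config.items()
            if self.values.get(field, value) != value
            and self.owners.get(field, set()) - {manager}
        ]
        if conflicts and not force:
            raise Conflict(f"conflict on {conflicts}")
        for field, value in config.items():
            if field in conflicts:
                self.owners[field] = {manager}  # forced takeover
            else:
                self.owners.setdefault(field, set()).add(manager)  # own or co-own
            self.values[field] = value

    def update(self, manager, changes):
        """Model of a non-apply update: changed fields switch owner, never a conflict."""
        for field, value in changes.items():
            if self.values.get(field) != value:
                self.owners[field] = {manager}
            self.values[field] = value


deploy = ToyObject()
deploy.apply("kubectl (Apply)", {"spec.replicas": 1})
deploy.update("kubectl (Update, scale)", {"spec.replicas": 5})  # like kubectl scale

try:
    deploy.apply("kubectl (Apply)", {"spec.replicas": 1})
    outcome = "applied"
except Conflict as err:
    outcome = str(err)
print(outcome)  # conflict on ['spec.replicas']

# Equivalent of re-running with --force-conflicts: take the field back.
deploy.apply("kubectl (Apply)", {"spec.replicas": 1}, force=True)
print(deploy.values, deploy.owners)
```

The update path never raises, which is exactly why the scale went through silently while the second apply got stopped.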

Migrating from CSA -> SSA

Just apply the latest up-to-date local configuration using the --server-side flag.

kubectl apply --server-side ...

The good news is that your kubectl.kubernetes.io/last-applied-configuration annotation is not dropped, instead, it is now maintained by:

- apiVersion: apps/v1
  fieldsType: FieldsV1
  fieldsV1:
    f:metadata:
      f:annotations:
        f:kubectl.kubernetes.io/last-applied-configuration: {}
  manager: kubectl-last-applied
  operation: Apply

Benefits of SSA

There is a great article about the benefits of SSA and why you should be using it by Daniel Smith.

One point I would like to demonstrate about the benefits of SSA is that you can merge list items without doing a GET (which is done by $ kubectl patch).

Again example over words:

cat <<EOF | kubectl apply --server-side -f -
apiVersion: v1
kind: Pod
metadata:
  labels:
    run: test-pod
  name: test-pod
spec:
  containers:
  - image: nginx
    name: nginx
  - image: redis
    name: redis
EOF

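Try patching the pod with kubectl patch. By default it sends a strategic merge patch (content type application/strategic-merge-patch+json, as the log below shows), not a JSON Merge Patch.

echo "spec:
  containers:
  - image: nginx:alpine
    name: nginx
  - image: redis
    name: redis" > patch.yaml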

kubectl patch pod test-pod --patch-file patch.yaml -v=10 2>&1 | grep -E 'GET|PATCH'

Output:

I0622 15:36:43.050475 73605 round_trippers.go:466] curl -v -XGET -H "Accept: application/json" -H "User-Agent: kubectl/v1.26.0 (linux/amd64) kubernetes/b46a3f8" 'https://127.0.0.1:42205/api/v1/namespaces/default/pods/test-pod'

I0622 15:36:43.055683 73605 round_trippers.go:553] GET https://127.0.0.1:42205/api/v1/namespaces/default/pods/test-pod 200 OK in 5 milliseconds

I0622 15:36:43.056024 73605 round_trippers.go:466] curl -v -XPATCH -H "Accept: application/json" -H "Content-Type: application/strategic-merge-patch+json" -H "User-Agent: kubectl/v1.26.0 (linux/amd64) kubernetes/b46a3f8" 'https://127.0.0.1:42205/api/v1/namespaces/default/pods/test-pod?fieldManager=kubectl-patch'

I0622 15:36:43.068214 73605 round_trippers.go:553] PATCH https://127.0.0.1:42205/api/v1/namespaces/default/pods/test-pod?fieldManager=kubectl-patch 200 OK in 12 milliseconds

As you can see, it first makes a GET request to fetch the object and then sends a PATCH. Compare that with patching through SSA (starting from the original pod state):

cat <<EOF | kubectl apply --server-side -v=10 2>&1 -f - | grep -E 'GET|PATCH'
apiVersion: v1
kind: Pod
metadata:
  labels:
    run: test-pod
  name: test-pod
spec:
  containers:
  - image: nginx:alpine
    name: nginx
  - image: redis
    name: redis
EOF

Output:

I0622 15:46:45.353417 76259 round_trippers.go:466] curl -v -XGET -H "Accept: application/com.github.proto-openapi.spec.v2@v1.0+protobuf" -H "User-Agent: kubectl/v1.26.0 (linux/amd64) kubernetes/b46a3f8" 'https://127.0.0.1:42205/openapi/v2?timeout=32s'

I0622 15:46:45.372004 76259 round_trippers.go:553] GET https://127.0.0.1:42205/openapi/v2?timeout=32s 200 OK in 18 milliseconds

00009d20 50 41 54 43 48 29 20 63 6f 6e 74 61 69 6e 69 6e |PATCH) containin|

0000c730 50 4f 53 54 2f 50 55 54 2f 50 41 54 43 48 29 20 |POST/PUT/PATCH) |

...

00301600 65 72 6e 65 74 65 73 20 50 41 54 43 48 20 72 65 |ernetes PATCH re|

I0622 15:46:45.459874 76259 round_trippers.go:466] curl -v -XPATCH -H "Content-Type: application/apply-patch+yaml" -H "User-Agent: kubectl/v1.26.0 (linux/amd64) kubernetes/b46a3f8" -H "Accept: application/json" 'https://127.0.0.1:42205/api/v1/namespaces/default/pods/test-pod?fieldManager=kubectl&fieldValidation=Strict&force=false'

I0622 15:46:45.473044 76259 round_trippers.go:553] PATCH https://127.0.0.1:42205/api/v1/namespaces/default/pods/test-pod?fieldManager=kubectl&fieldValidation=Strict&force=false 200 OK in 13 milliseconds

As you can see, the object we wanted to modify wasn't fetched and was directly updated using PATCH as our content type was application/apply-patch+yaml.
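So what does "merging list items" on the server roughly look like? Conceptually, items are matched on their key (name for containers) instead of the whole list being replaced. Here is a toy Python sketch of that idea. It is my own illustration and not the structured-merge-diff implementation: merge_by_key is a hypothetical helper, and it ignores field ownership and the removal of items a manager stops applying.

```python
def merge_by_key(live_items, applied_items, key="name"):
    """Merge applied list items into the live list, matching items on `key`."""
    merged = {item[key]: dict(item) for item in live_items}
    for item in applied_items:
        # Existing item with the same key: update its fields in place.
        # Unknown key: add it as a new item.
        merged.setdefault(item[key], {}).update(item)
    return list(merged.values())


live_containers = [
    {"name": "nginx", "image": "nginx"},
    {"name": "redis", "image": "redis"},
]
applied_containers = [
    {"name": "nginx", "image": "nginx:alpine"},
    {"name": "redis", "image": "redis"},
]

merged_containers = merge_by_key(live_containers, applied_containers)
print(merged_containers)
# [{'name': 'nginx', 'image': 'nginx:alpine'}, {'name': 'redis', 'image': 'redis'}]
```

Because this matching happens on the server, the client only has to send its desired state. It never needs the live object, hence no GET.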

SSA comes with multi-fold benefits and fixes the limitations of CSA. While SSA also has its fair share of issues, it is definitely going in the right direction. There is an open issue for making SSA default which would be a big leap toward improving field management in Kubernetes.